A PhD Scholarship in Mathematical Optimisation is available, working with Dr. Scott B. Lindstrom at the Centre for Optimisation and Decision Science, Curtin University. Please share this opportunity with any students who may be interested.

Eligibility

You must be one of the following:

- Australian Citizen

- Australian Permanent Resident

- New Zealand Citizen

- Permanent Humanitarian Visa holder

Deadline: no expressions of interest will be accepted after 25th August, 2023. This is a University deadline for all projects in this category, and it cannot be extended. Would-be applicants are strongly encouraged to submit their expressions of interest well in advance of this deadline.

Overview

The annual scholarship package (stipend and tuition fees) is approx. $60,000 – $70,000 p.a.

Successful HDR applicants will receive a 100% fee offset for up to 4 years and a stipend scholarship at the 2023 RTP rate, valued at $32,250 p.a., for a maximum of 3 years, with a possible 6-month completion scholarship. Scholarship recipients are determined via a competitive selection process, and applicants will be notified of the scholarship outcome in November 2023.

The official advertisement for the position is here, and more information is below.

For detailed information about RTP Scholarships, visit: Research Training Program (RTP) Scholarships | Curtin University, Perth, Australia.

Description

In data science, machine learning, and engineering, many problems take the form of finding a solution that minimizes a cost, subject to constraints on allowable solutions. Some examples of costs include expected financial losses, model prediction errors, and energy used. Some examples of constraints include resource limitations, minimum requirements on what is produced, and so forth.

These problems are solved with operator splitting methods, a modern class of non-linear optimisation algorithms that allow the constraint structure and cost structure to be treated as two components of a single unifying function. These algorithms were independently discovered by mathematicians working on physics and imaging problems, and they have been developed and improved with the powerful machinery of convex analysis.

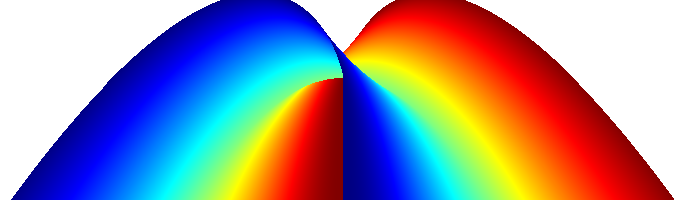
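To make the idea of splitting concrete, here is a minimal sketch of the Douglas-Rachford method, one well-known operator splitting algorithm. It is an illustration only, not the project's meta-algorithm. The toy problem is an assumption chosen for simplicity: minimise the cost 0.5 * ||x - c||^2 subject to the constraint that every coordinate of x lies in [lo, hi]. The function names and parameters (`project_box`, `prox_quadratic`, `douglas_rachford`, `gamma`, `iters`) are hypothetical.

```python
def project_box(v, lo, hi):
    # Constraint step: nearest point to v inside the box [lo, hi]^n.
    return [min(max(vi, lo), hi) for vi in v]


def prox_quadratic(v, c, gamma):
    # Cost step: proximal operator of gamma * 0.5 * ||x - c||^2.
    return [(vi + gamma * ci) / (1.0 + gamma) for vi, ci in zip(v, c)]


def douglas_rachford(c, lo=0.0, hi=1.0, gamma=1.0, iters=200):
    # Assumed toy problem: minimise 0.5 * ||x - c||^2 subject to lo <= x_i <= hi.
    z = [0.0] * len(c)
    for _ in range(iters):
        x = project_box(z, lo, hi)                        # handle the constraint
        reflected = [2.0 * xi - zi for xi, zi in zip(x, z)]
        y = prox_quadratic(reflected, c, gamma)           # handle the cost
        z = [zi + yi - xi for zi, yi, xi in zip(z, y, x)]  # update governing sequence
    return project_box(z, lo, hi)


solution = douglas_rachford([2.0, 0.5, -1.0])
print(solution)
```

The constraint and the cost are each handled by their own simple step, and the sequence `z` is the object whose dynamics one would study. For this toy problem the answer is simply the projection of `c` onto the box, which makes the output easy to check.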

For many important problems, we desire to make these algorithms go faster, either to find solutions within the maximum time allowable (for example: balancing power flow in electricity grids) or to make better data science models computationally tractable for large data sets. Researchers have recently turned to studying the dynamical systems associated with operator splitting methods. This research is allowing us to prove results in nonconvex settings and build new algorithms. Dr. Scott Lindstrom recently introduced a meta-algorithm that uses operator dynamics to suggest alternative algorithm updates. The intent of this meta-algorithm is to solve surrogates for something called a Lyapunov function, which is an object that describes the dynamics. This meta-algorithm has already become state-of-the-art for finding wavelets with structural constraints (an imaging sciences problem).

Scientific Aims: The scientific aim of this project is to identify classes of problems in data science, machine learning, and engineering for which meta-algorithms, such as the one described above, may deliver superior performance. The approach will be multi-faceted, combining both computational experiment and rigorous proof. The results will be communicated in articles and submitted to peer-reviewed publications.

Upskilling Aims: The upskilling aims for the selected candidate are as follows (in no particular order). The candidate will build expertise in the algorithms that make it possible to solve many modern data science models and engineering problems, understanding how the algorithms are designed, how geometry informs model selection, and what the outstanding challenges are. At the project’s completion, the candidate will be competent to rigorously conduct both experimental and theoretical mathematics research, and to communicate the results of their discoveries to others in the field.

In the literature review component, you will learn the fundamental theory—convex analysis—of operator splitting and learn how operator splitting algorithms are formulated for solving various classes of problems. Some examples of the types of problems you will study are as follows: (1) least absolute deviations for outlier-resistant linear regression (a data science modelling problem), (2) progressive hedging for maximizing expected earnings (a finance problem), (3) computation of a one-norm centroid (a statistics problem), and (4) phase retrieval (a signal processing problem).

In the experimental component, you will apply Lyapunov surrogate methods to solve those problems. You will build performance profiles, which are visualizations that allow researchers to compare the speeds of different algorithms.
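As a rough illustration of what goes into a performance profile, the sketch below follows the commonly used ratio-based construction: for each problem, every solver's cost is divided by the best cost any solver achieved on that problem, and the profile reports the fraction of problems a solver handles within a factor `tau` of the best. The function name, the data layout, and the timing numbers are all hypothetical. Costs are assumed to be strictly positive, with `math.inf` marking a failed run.

```python
import math


def performance_profile(times, taus):
    # times[p][s]: cost (e.g. seconds) of solver s on problem p; math.inf = failure.
    # Returns profile[s][k]: fraction of problems solver s solves within
    # a factor taus[k] of the best solver on that problem.
    n_problems = len(times)
    n_solvers = len(times[0])
    ratios = []
    for row in times:
        best = min(row)  # assumed strictly positive
        ratios.append([t / best for t in row])
    profile = []
    for s in range(n_solvers):
        profile.append(
            [sum(1 for r in ratios if r[s] <= tau) / n_problems for tau in taus]
        )
    return profile


# Made-up timings for two solvers on four problems (illustrative only).
example_times = [
    [1.0, 2.0],
    [3.0, 1.5],
    [2.0, 2.0],
    [4.0, math.inf],  # second solver failed on this problem
]
example_profile = performance_profile(example_times, taus=[1.0, 2.0, 4.0])
print(example_profile)
```

Plotting each row of the result against `taus` gives one curve per solver; a curve that is higher and rises sooner indicates a solver that is more often close to the best.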

In the theoretical component, you will formally analyse the dynamical systems associated with operator splitting methods when they are applied to these problem classes. Particular emphasis will be placed on the duality of algorithms; duality is a fundamental concept in convex analysis.

You will document and communicate the findings in written articles.

Background: In 2015, Australian researchers Jonathan M. Borwein and Brailey Sims seeded the study of dynamical systems for operator splitting methods. This has rapidly grown into an expansive field in its own right. A Google Scholar search for “Dynamical Systems and ADMM” returns 17,100 results in the last 8 years, with applications including distributed DC optimal power flow. The principal of this project, Dr. Scott Lindstrom, worked extensively with Borwein and Sims, and was one of the 41 invited participants at the 2017 Banff International Research Station workshop for the world’s leading experts on splitting algorithms. Together with Walaa Moursi (Waterloo) and Matthew K. Tam (Melbourne), he is co-organizing the 2025 Australian Matrix workshop on operator splitting.

Dr. Lindstrom’s article introducing the Lyapunov Surrogate method was published in Computational Optimization and Applications, a top journal in the field of optimization. The meta-algorithm has already been demonstrated to be state-of-the-art for finding structured wavelets, in research by Drs. Scott Lindstrom, Neil Dizon (U. Helsinki) and Jeffrey Hogan (U. Newcastle).

Future context: As described in the overview, in the here and now, faster operator splitting methods will allow us to obtain better solutions to important problems in data science and energy. On a ten-year horizon, this research advances an emerging paradigm in problem solving, where artificial intelligence will observe an algorithm’s performance and suggest on-the-fly parameter adjustments and alternative updates for the iterates. Finally, the project builds fundamental knowledge in the mathematical sciences and equips the selected candidate with a skill set that is in very high demand.

Internship Opportunities: This project may provide an internship opportunity.

Interested applicants should submit the expression of interest form on this page. Questions may be directed to Dr. Scott B. Lindstrom (scott.lindstrom@curtin.edu.au).