VA & Opt Webinar: Russell Luke

Title: Inconsistent Stochastic Feasibility: the Case of Stochastic Tomography

Speaker: Russell Luke (University of Göttingen)

Date and Time: April 7th, 2021, 17:00 AEST (Register here for remote connection via Zoom)

In an X-FEL experiment, high-energy X-ray pulses are fired at high repetition rates at a stream of identical single biomolecules, and the scattered photons are recorded on a pixelized detector. These experiments provide a new and unique route to macromolecular structure determination at room temperature, without the need for crystallization, and at low material usage. The main challenges in these experiments are the extremely low signal-to-noise ratio, due to the very low expected photon count per scattering image (10-50), and the unknown orientation of the molecules in each scattering image.

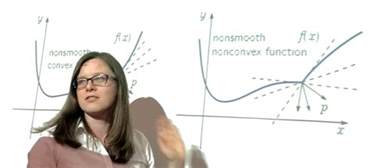

Mathematically, this is a stochastic computed tomography problem where the goal is to reconstruct a three-dimensional object from noisy two-dimensional images of a nonlinear mapping whose orientation relative to the object is both random and unobservable. The idea is to develop a two-step procedure for solving this problem. In the first step, we numerically compute a probability distribution associated with the observed patterns (taken together) as the stationary measure of a Markov chain whose generator is constructed from the individual observations. Correlation in the data and other a priori information are used to further constrain the problem and accelerate convergence to a stationary measure. With the stationary measure in hand, the second step involves solving a phase retrieval problem for the mean electron density relative to a fixed reference orientation.
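As a rough illustration of what "computing a stationary measure" means in the simplest finite-state setting, the sketch below applies power iteration to a small row-stochastic matrix. The matrix `P` and the function name `stationary_distribution` are hypothetical and chosen only for illustration; the chain in the talk is built from scattering data and lives on a far larger space.

```python
def stationary_distribution(P, tol=1e-12, max_iter=10_000):
    """Approximate pi with pi = pi P for a row-stochastic matrix P (power iteration)."""
    n = len(P)
    pi = [1.0 / n] * n  # start from the uniform distribution
    for _ in range(max_iter):
        # One step of the chain acting on distributions: nxt = pi P
        nxt = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    return pi


# Hypothetical 3-state chain (assumption: irreducible and aperiodic,
# so the stationary distribution is unique and power iteration converges).
P = [
    [0.50, 0.50, 0.00],
    [0.25, 0.50, 0.25],
    [0.00, 0.50, 0.50],
]

pi = stationary_distribution(P)
print(pi)
```

For this toy matrix the detailed-balance equations give the stationary distribution (0.25, 0.5, 0.25), which the iteration should reproduce to within the tolerance.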

The focus of this talk is conceptual, and involves re-envisioning projection algorithms as Markov chains. We present some new routes to “old” results, along with a fundamentally new approach to understanding and accounting for numerical computation on conventional computers.
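To make the idea of a projection algorithm viewed as a Markov chain concrete, here is a minimal toy sketch (not the speaker's method). It assumes two disjoint sets on the real line, the singletons {0} and {1}, and a relaxed projection step with parameter `lam = 0.5` onto a set chosen uniformly at random. Because the sets do not intersect, the iterates never settle on a point, but their distribution does converge; for this particular choice the invariant measure is the uniform distribution on [0, 1]. The names `relaxed_projection` and `run_chain` are hypothetical.

```python
import random


def relaxed_projection(x, target, lam=0.5):
    """Move a fraction lam of the way from x to its projection onto the set {target}."""
    return (1.0 - lam) * x + lam * target


def run_chain(x0, targets, n_steps, seed=0):
    """Iterate relaxed projections onto randomly selected singleton sets."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    x = x0
    samples = []
    for _ in range(n_steps):
        x = relaxed_projection(x, rng.choice(targets))
        samples.append(x)
    return samples


# Inconsistent feasibility problem: the sets {0} and {1} have empty intersection.
samples = run_chain(x0=5.0, targets=[0.0, 1.0], n_steps=20_000)

tail = samples[1_000:]            # discard the initial transient
mean = sum(tail) / len(tail)      # empirical mean of the (approximately) stationary samples
spread = max(tail) - min(tail)    # stays large: the iterates themselves do not converge
print(mean, spread)
```

The point of the sketch is the change of viewpoint: convergence is asked of the distribution of the iterates, not of the iterates themselves.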