VA & Opt Webinar: Yboon Garcia Ramos

Title: Characterizing quasiconvexity of the pointwise infimum of a family of arbitrary translations of quasiconvex functions

Speaker: Yboon Garcia Ramos (Universidad del Pacífico)

Date and Time: March 31st, 2021, 11:00 AEDT (registration required for remote connection via Zoom)

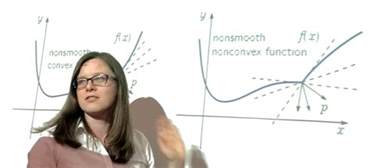

Abstract: In this talk we will present results on when quasiconvexity is preserved under the sum of quasiconvex functions, and we will relate this to the problem of when the infimum of a family of quasiconvex functions remains quasiconvex. To develop our study, we introduce the notion of a quasiconvex family and establish various characterizations of this concept.
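As a standard textbook illustration (not taken from the talk), both failures mentioned in the abstract already occur on the real line. The functions f(x) = x^3 and g(x) = -x are monotone, hence quasiconvex, yet their sum x^3 - x has the non-convex sublevel set {x : x^3 - x <= 0} = (-inf, -1] U [0, 1]. Likewise, min(|x - 1|, |x + 1|) is not quasiconvex, because its value 1 at x = 0 exceeds its value 0 at both x = -1 and x = 1. The Python sketch checks the defining inequality f(t x + (1 - t) y) <= max{f(x), f(y)} on a finite grid. The helper name is_quasiconvex_on_grid is hypothetical, and such a grid test can only refute quasiconvexity, never prove it.

```python
# Hypothetical helper for illustration only: tests quasiconvexity of a
# function of one real variable from its values on an increasing grid.

def is_quasiconvex_on_grid(values, tol=1e-12):
    """Return True if values[j] <= max(values[i], values[k]) for all
    grid indices i < j < k (the 1-D definition of quasiconvexity)."""
    n = len(values)
    if n < 3:
        return True
    # prefix_min[j] = min(values[0..j]), suffix_min[j] = min(values[j..n-1])
    prefix_min = [0.0] * n
    suffix_min = [0.0] * n
    prefix_min[0] = values[0]
    for j in range(1, n):
        prefix_min[j] = min(prefix_min[j - 1], values[j])
    suffix_min[n - 1] = values[n - 1]
    for j in range(n - 2, -1, -1):
        suffix_min[j] = min(suffix_min[j + 1], values[j])
    for j in range(1, n - 1):
        # A violation at j exists iff values[j] is strictly larger than some
        # value on its left AND some value on its right; the smallest values
        # on each side are the best candidates.
        if values[j] > max(prefix_min[j - 1], suffix_min[j + 1]) + tol:
            return False
    return True


xs = [-2.0 + 4.0 * i / 400 for i in range(401)]  # grid on [-2, 2], step 0.01
cube = [x ** 3 for x in xs]                       # f(x) = x^3, quasiconvex
neg = [-x for x in xs]                            # g(x) = -x, quasiconvex
total = [a + b for a, b in zip(cube, neg)]        # f + g = x^3 - x
dist_min = [min(abs(x - 1), abs(x + 1)) for x in xs]

print(is_quasiconvex_on_grid(cube), is_quasiconvex_on_grid(neg))  # True True
print(is_quasiconvex_on_grid(total))     # False
print(is_quasiconvex_on_grid(dist_min))  # False
```

The prefix and suffix minima make the check linear in the number of grid points instead of cubic over all triples i < j < k.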

Joint work with Fabián Flores, Universidad de Concepción and Nicolas Hadjisavvas, University of the Aegean.